It’s been a few weeks since we hosted the 22nd Athens Kubernetes Meetup in Greece, and we were very excited to present our new enterprise cluster to a wider audience.

This article aims to share our thought process, the challenges we encountered, and how we overcame them to build a solution that stands as a testament to our commitment to innovation and excellence. By sharing our story, we hope to inspire others in the industry to explore new possibilities and to think critically about how technology can be harnessed to solve complex problems. Our journey with CELL is just beginning, and we’re excited about the future possibilities it holds for Vodafone Greece.

Modernising Vodafone Greece

Vodafone Greece has been on a mission to boost its tech infrastructure over the past few years.

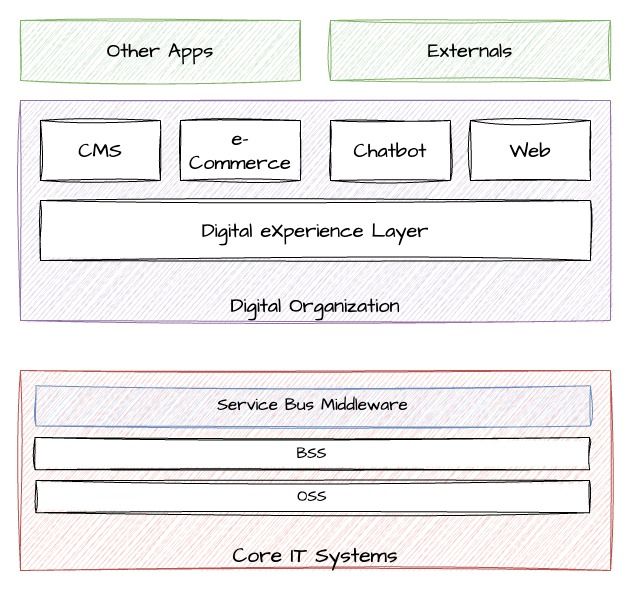

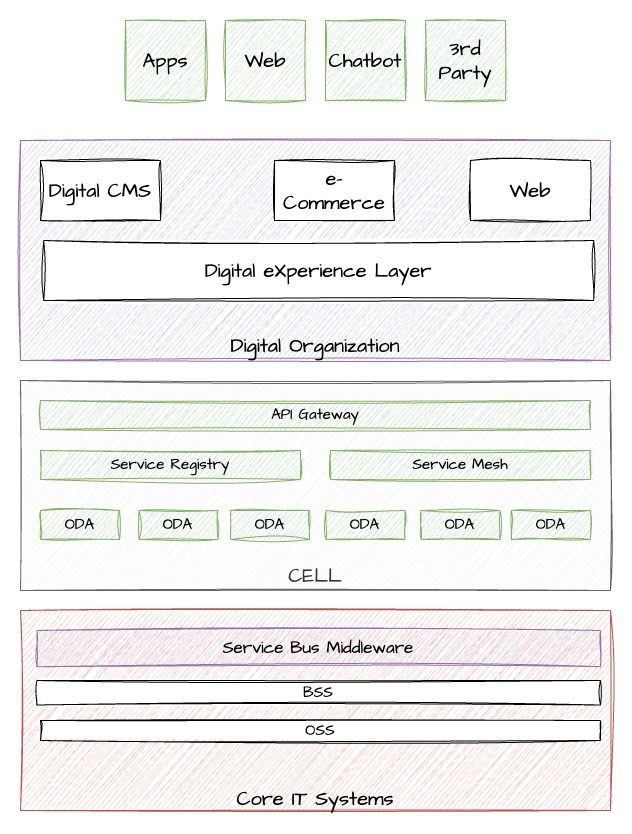

At the heart of our day-to-day operations are the CoreIT systems, home to the essential Operational and Business Support Systems. This area is expansive, filled with various CRMs, payment systems, and service buses tailored to different needs. The databases here are relational and store the crucial data that keeps the company running, including our customer information and statuses.

Vodafone’s story isn’t a short one. With a history stretching back over 30 years, marked by a series of mergers and acquisitions, we’ve gathered a rich tapestry of technologies, solutions, and, inevitably, some challenges, especially within our CoreIT environment. Adding to this complexity is our physical presence, with stores spread across the country, each equipped with its own tools for serving customers. It may come as a surprise, but our CoreIT world is quite the behemoth, hosting over 150 different systems and applications.

So, how do you start to change something as intricate as this? And how do you stay aligned with the fast-paced demands of the industry and our customers, who now expect nearly instant service?

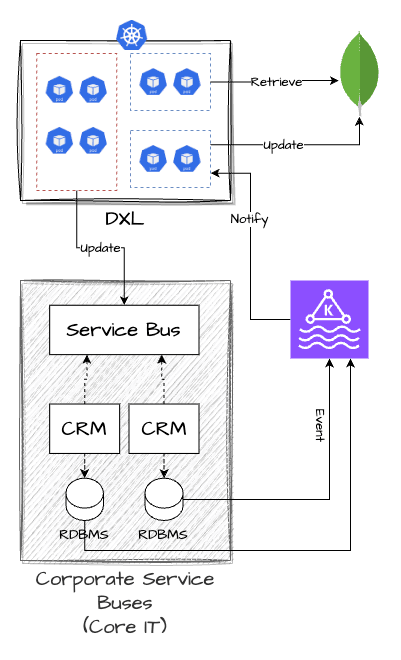

The DXL

Six years ago, Vodafone Greece embarked on a modernization journey, with the Digital division leading the charge toward a new digital era through the creation of the Digital eXperience Layer (DXL). This microservices cluster, the product of years of technological exploration and innovation, uses the CQRS (Command Query Responsibility Segregation) pattern to connect our Corporate Backend Systems with a new suite of microservices, optimizing data flow and storage with MongoDB. DXL has matured into a vital digital middleware of 200 microservices. Along the way it has significantly reduced the strain on backend systems, mitigated overload risks, and introduced modern development practices together with a mobile-friendly REST API, elevating the user experience on our digital platforms.
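
To make the CQRS idea concrete, here is a minimal sketch in Python. It is not DXL code. The class and event names are hypothetical, and a plain dictionary stands in for the MongoDB read store. Writes (commands) go to the backend system of record, and a projection copies each resulting change into a read model. Reads (queries) are then answered from that model without touching the backend.

```python
from dataclasses import dataclass, field


@dataclass
class CustomerChanged:
    """Hypothetical change event emitted after a write to the backend."""
    customer_id: str
    status: str


@dataclass
class BackendSystem:
    """Stand-in for a corporate backend (the system of record)."""
    records: dict = field(default_factory=dict)
    reads: int = 0  # counts reads that actually hit the backend

    def handle_update(self, customer_id: str, status: str) -> CustomerChanged:
        # Command side: every write goes to the system of record.
        self.records[customer_id] = status
        return CustomerChanged(customer_id, status)

    def get_status(self, customer_id: str) -> str:
        self.reads += 1
        return self.records[customer_id]


class ReadModel:
    """Query side: a denormalized view (a dict here; MongoDB in DXL)."""

    def __init__(self) -> None:
        self.view: dict = {}

    def project(self, event: CustomerChanged) -> None:
        # Projection: keep the view in sync with backend changes.
        self.view[event.customer_id] = {"status": event.status}

    def query(self, customer_id: str) -> dict:
        # Queries are answered from the view and never reach the backend.
        return self.view[customer_id]


backend = BackendSystem()
read_model = ReadModel()
read_model.project(backend.handle_update("c-42", "ACTIVE"))
for _ in range(1000):
    read_model.query("c-42")
print(read_model.query("c-42"), backend.reads)  # {'status': 'ACTIVE'} 0
```

In a real system the projection typically runs asynchronously, so the read model is only eventually consistent with the backend. That trade-off is what lets heavy read traffic from digital channels bypass the backend.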

Scaling the Digital eXperience Layer (DXL), both in its technical responsibilities and in team management, revealed challenges. As DXL grew, we realized these challenges stemmed from the complexity of a large enterprise like Vodafone.

This realization led us to understand that traditional microservices clusters need adjustments to handle the complexities of a multinational corporation’s environment effectively.

Issues with traditional microservices clusters

Microservices are supposed to help big organizations scale by letting independent teams work together on the same cluster. But the road is not always easy. Remember, Vodafone Greece is a very complex organization, both in size and in processes.

Release Management & Conflicts

Managing a large microservices cluster, such as the one employed by Vodafone Greece, introduces intricate challenges, particularly in the realm of release management. The coexistence of multiple teams working on a shared platform leads to inevitable release collisions. This complexity is increased when certain microservices, essential across different customer journeys, require simultaneous updates by teams with divergent priorities and timelines. Such scenarios complicate our branching strategies and release schedules, creating a delicate balancing act.

Our projects’ mix of agile and traditional waterfall methodologies further complicates synchronization. Waterfall projects, with their rigid timelines, must align with the agile-paced updates of the DXL, putting additional pressure on our release management processes. This alignment challenge can create bottlenecks that slow the delivery of updates.

These operational problems have several implications for software delivery teams. Firstly, they introduce significant overhead in managing dependencies and coordinating releases, leading to potential delays in time to market—a critical factor in today’s fast-paced environment. Secondly, they pose quality assurance challenges, as ensuring the integrity of releases becomes more complex with the increasing number of interdependencies. Lastly, the strain on resources can divert attention from innovation, impacting the overall growth and competitiveness of the organization.

Operational Technical Configuration / Manual Operations

At Vodafone, launching an API involves collaboration across various teams spread out over different countries, showcasing the complexity of managing a large tech infrastructure like the DXL. The process requires tight coordination between the team responsible for the API Gateway and the microservice development team.

This necessitates a clear agreement on the API specifications early in the process and constant communication for any changes, introducing potential delays that affect both API Engineers and Solution Architects, who must navigate these cross-team communications efficiently.

CELL

Designing CELL

CELL stands for Componentized Enterprise Logical Layer; for brevity, we refer to it simply as CELL.

Around 2022, Vodafone Greece decided to modernize the CoreIT systems and invest in microservices. The goal was to replicate the successes of the DXL while refining the approach based on lessons learned from that first foray into microservices. Given the depth of CoreIT’s complexity and the many teams involved, we knew this task would be anything but straightforward. The vision for the new CoreIT cluster was not merely to create another middleware layer. Instead, we wanted a more comprehensive solution that could act as middleware where needed and go beyond that role.

To address the challenges identified in the DXL’s implementation and to lay a foundation for further modernization, Vodafone Greece turned to the Open Digital Architecture proposed by TMForum.

TMForum’s “Open Digital Architecture (ODA) is a standardized cloud-native enterprise architecture blueprint for all elements of the industry from Communication Service Providers (CSPs), through suppliers to system integrators”

This framework is particularly focused on leveraging microservices to facilitate cloudification, covering all critical aspects of the technology lifecycle, though Vodafone Greece was primarily interested in the “Deployment and Runtime” components of the blueprint.

By adopting ODA, Vodafone Greece aimed to solve not only the immediate technical and operational challenges but also to establish a scalable and flexible architecture for the future.

We named the result of this effort CELL (Componentized Enterprise Logical Layer).

As a microservices layer, CELL is designed to modernize existing monolithic structures, aiming to become the primary platform for delivering projects and features. Its design goes beyond the traditional middleware role, positioning it as a main system within the technological ecosystem.

The innovation behind CELL lies in its approach to fostering efficiency and agility:

- It facilitates team independence by decoupling team operations, enabling parallel progress without dependencies.

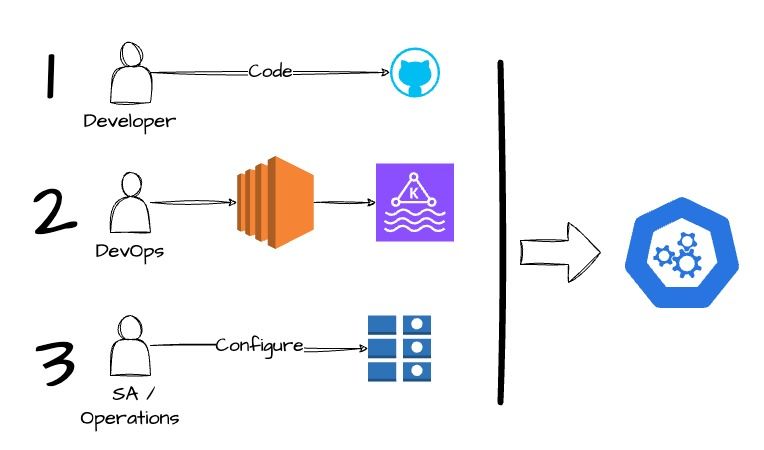

- It separates environmental considerations from software development through spec-driven deployments and automated infrastructure configuration, streamlining the development cycle.

By addressing these areas, CELL aims to eliminate common bottlenecks faced by Solution Architects, DevOps teams, and developers, thereby enhancing the overall productivity and speed of service delivery at Vodafone Greece.

Under the hood

Topology

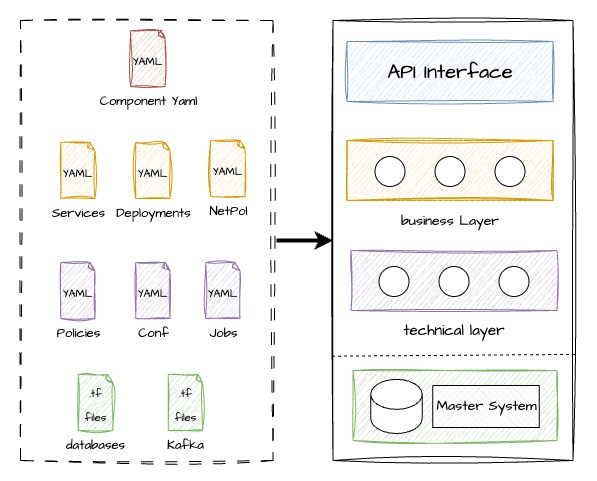

A high-level topology of CELL can be viewed below.

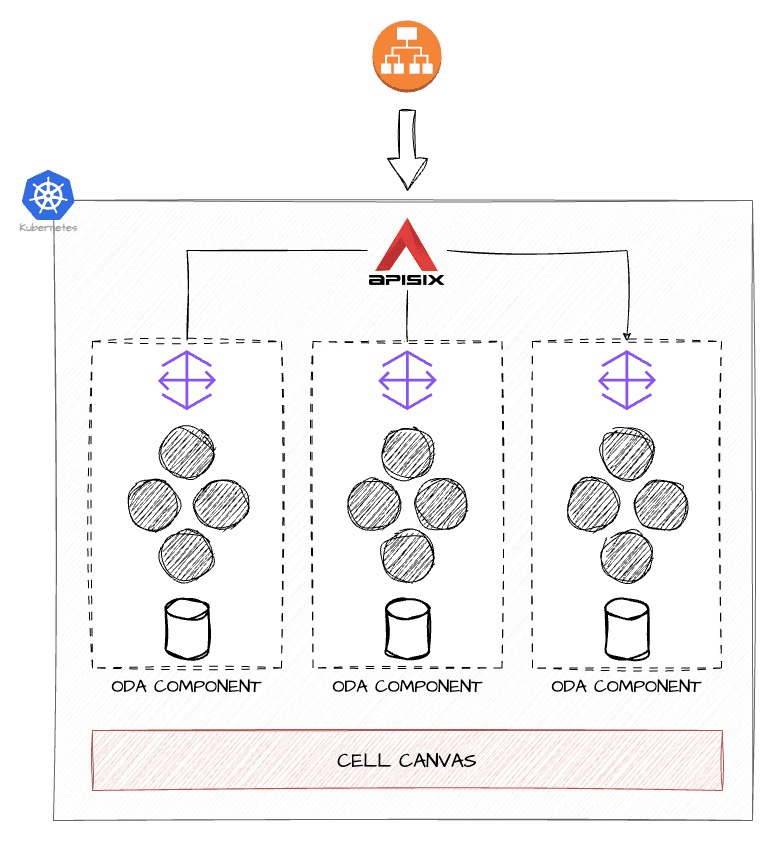

We use Terraform for all infrastructure orchestration and ArgoCD for application deployment within Kubernetes. This lets us take a fully declarative approach to both infrastructure and applications, ensuring consistency, scalability, and ease of management across the board.
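
Terraform and ArgoCD work very differently under the hood, but both follow the same declarative idea. You state the desired state, and the tool computes the actions needed to converge on it. The toy planner below illustrates only that idea. The resource names and specs are hypothetical, and this is not how either tool is implemented.

```python
def plan(desired: dict, actual: dict) -> dict:
    """Compute the actions needed to converge `actual` onto `desired`.

    Both arguments map a resource name to its spec.
    """
    create = sorted(name for name in desired if name not in actual)
    delete = sorted(name for name in actual if name not in desired)
    update = sorted(
        name for name in desired
        if name in actual and desired[name] != actual[name]
    )
    return {"create": create, "update": update, "delete": delete}


def apply(desired: dict, actual: dict) -> dict:
    """Execute a plan and return the new state; re-applying changes nothing."""
    actions = plan(desired, actual)
    new_state = dict(actual)
    for name in actions["create"] + actions["update"]:
        new_state[name] = desired[name]
    for name in actions["delete"]:
        del new_state[name]
    return new_state


# Hypothetical resources, for illustration only.
desired = {"eks-cluster": {"version": "1.29"}, "keycloak": {"replicas": 2}}
actual = {"eks-cluster": {"version": "1.28"}, "legacy-vm": {"size": "large"}}
print(plan(desired, actual))
# {'create': ['keycloak'], 'update': ['eks-cluster'], 'delete': ['legacy-vm']}
converged = apply(desired, actual)
print(plan(desired, converged))
# {'create': [], 'update': [], 'delete': []}
```

Running the planner against the converged state yields an empty plan, which is what makes declarative tooling safe to re-run.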

At the entrance to the cluster sits the Apisix Ingress Controller, paired with a Keycloak installation that provides OpenID Connect authentication. This setup secures access and streamlines authentication across CELL. It also lets us implement authentication and authorization declaratively, using Kubernetes resources (ApisixRoute, ApisixClusterConfig, ApisixPluginConfig, etc.). At the same time, we retain the full feature set of the Apisix Gateway through Apisix-specific features.
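
As an illustration of this declarative gateway configuration, the sketch below builds an `ApisixRoute` manifest (`apisix.apache.org/v2`). The route is protected by Apisix’s `openid-connect` plugin, which points at a Keycloak realm’s OpenID Connect discovery document. Several parts are assumptions:

- The host, path, service, realm and client names are hypothetical.
- The discovery URL assumes a Keycloak version that serves realms under `/realms/` without an `/auth` prefix.
- A real client secret belongs in a Kubernetes Secret, not inline.

The script prints JSON, which `kubectl apply -f` accepts as well as YAML.

```python
import json


def apisix_route(name, host, path, service, port, keycloak_url, realm, client_id):
    """Build an ApisixRoute (apisix.apache.org/v2) protected by openid-connect."""
    discovery = f"{keycloak_url}/realms/{realm}/.well-known/openid-configuration"
    return {
        "apiVersion": "apisix.apache.org/v2",
        "kind": "ApisixRoute",
        "metadata": {"name": name},
        "spec": {
            "http": [
                {
                    "name": f"{name}-rule",
                    "match": {"hosts": [host], "paths": [path]},
                    "backends": [{"serviceName": service, "servicePort": port}],
                    "plugins": [
                        {
                            "name": "openid-connect",
                            "enable": True,
                            "config": {
                                "client_id": client_id,
                                # Placeholder: load the real secret from a Kubernetes Secret.
                                "client_secret": "REPLACE_ME",
                                "discovery": discovery,
                                # Validate bearer tokens instead of redirecting to a login page.
                                "bearer_only": True,
                            },
                        }
                    ],
                }
            ]
        },
    }


# All names below are hypothetical.
route = apisix_route(
    name="customer-status",
    host="api.example.internal",
    path="/customer-status/*",
    service="customer-status-svc",
    port=8080,
    keycloak_url="https://keycloak.example.internal",
    realm="cell",
    client_id="customer-status-api",
)
print(json.dumps(route, indent=2))
```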

For microservices development within CELL, we continue to use Quarkus, a choice informed by our successful experience with the DXL. The ODA Architecture’s recommendation to use Kubernetes Operators aligned well with our direction, prompting us to develop our own operators. Golang was a strong contender. However, our team’s proficiency in Java, Quarkus’ native compilation capabilities, and the Java Operator SDK’s testing advantages and rich feature set made Java the best choice for us.

What sets CELL apart from our other Kubernetes clusters is the introduction of the CELL / ODA Component, along with its integration into the CELL Canvas - our tailored adaptation of the ODA Canvas Blueprint. We will delve into this core concept in a short while.

Componentisation and Release Management with CELL

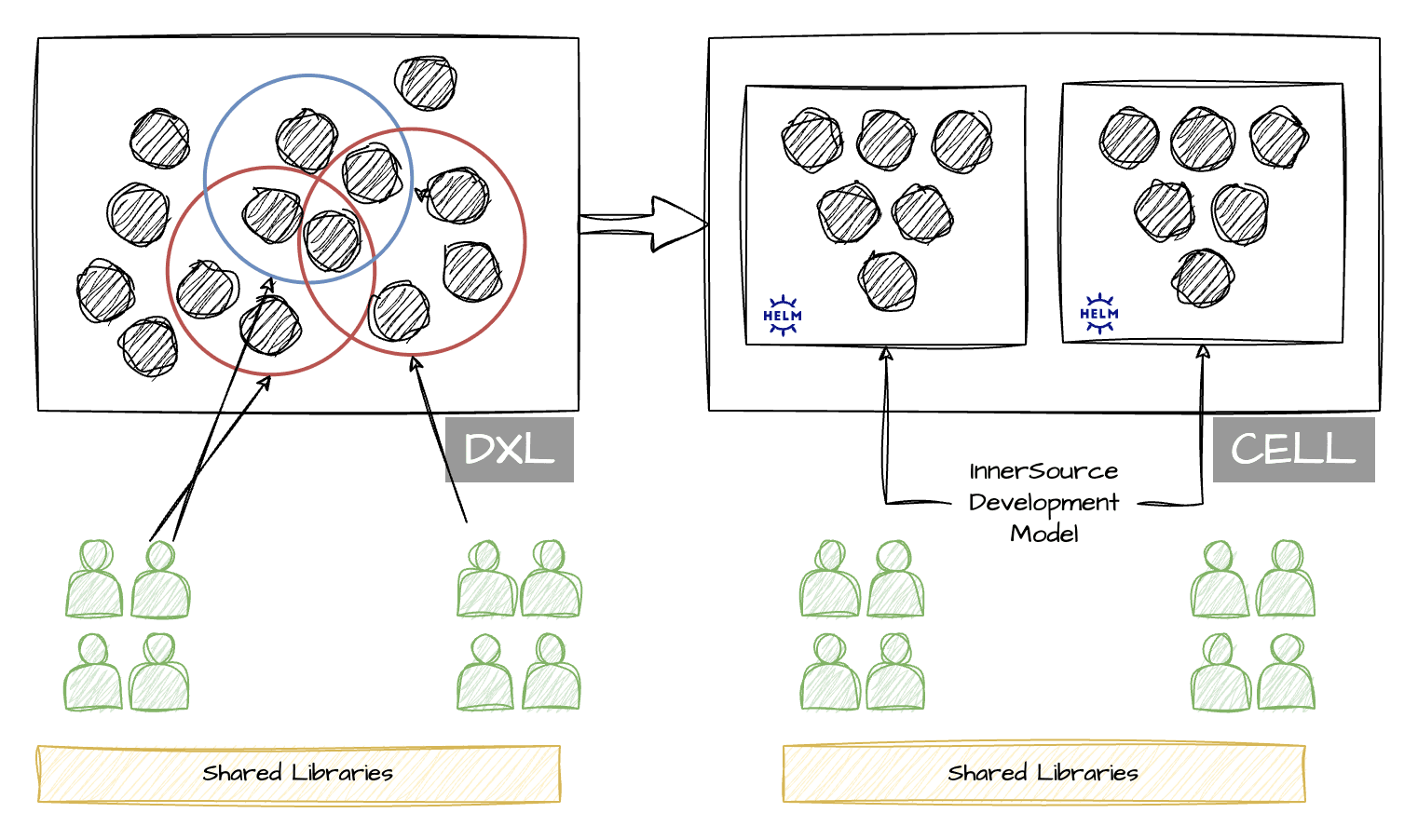

The ODA architecture bundles microservices that share a responsibility into components, which are deployed with Helm charts. This is in stark contrast with the way DXL handled reusability. In DXL, some core microservices were reused across many customer journeys. This maximized reusability, but problems arose when multiple teams tried to plan and make changes to those same microservices. The problem was made worse by the fact that 16 teams were working on the microservices of the DXL cluster.

With CELL, we bundle microservices together. When a microservice needs to be reused across components, it is updated using the InnerSource collaboration model, and its new version is installed with the new components. Each microservice therefore has a single source of truth, but it can have many instances inside Kubernetes. The small sacrifice in resource reusability (some microservices are instantiated more than once) buys great cost-efficiency, since conflicts between teams are minimized and project delays are avoided.
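
The trade-off between DXL’s shared microservices and CELL’s bundled ones can be sketched with hypothetical release plans, where each component lists the microservice versions it needs. With one shared instance per microservice, two teams needing different versions collide. With one instance per component, each team ships the version it needs, at the cost of extra instances. All component names, microservice names and versions are invented for illustration.

```python
from collections import defaultdict

# Hypothetical release plans: component -> {microservice: version it needs}
plans = {
    "billing-component": {"customer-profile": "2.1.0", "invoice": "1.4.0"},
    "ordering-component": {"customer-profile": "2.3.0", "order": "3.0.0"},
    "support-component": {"customer-profile": "2.1.0"},
}


def shared_model_conflicts(plans):
    """DXL-style: one shared instance per microservice.

    Returns the microservices for which teams want incompatible versions.
    """
    wanted = defaultdict(set)
    for needs in plans.values():
        for service, version in needs.items():
            wanted[service].add(version)
    return {s: sorted(v) for s, v in wanted.items() if len(v) > 1}


def bundled_model_instances(plans):
    """CELL-style: one instance per (component, microservice), so no shared deployment."""
    return sorted(
        (component, service, version)
        for component, needs in plans.items()
        for service, version in needs.items()
    )


shared_instances = len({s for needs in plans.values() for s in needs})
print(shared_instances, shared_model_conflicts(plans))
# 3 {'customer-profile': ['2.1.0', '2.3.0']}
print(len(bundled_model_instances(plans)))
# 5
```

Here three shared instances would force the billing and ordering teams to agree on a single `customer-profile` version. The bundled model runs five instances and has no such conflict.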

CELL Canvas, Components & Microservices

ODA / CELL Components

At the heart of CELL is the ODA / CELL Component, a key feature of CELL and of the ODA Architecture in general. ODA Components are to CELL what molecules are to matter.

A CELL component (modeled and adapted from the ODA component blueprint) is the smallest structure inside the cluster that can expose a REST API toward the API Gateway. The microservices inside the CELL Component work together to make this happen. If CELL Components are the molecules, then the microservices inside a CELL Component are the atoms.

The deployment of CELL components within our Kubernetes infrastructure is standardized using Helm charts. This method ensures consistency, scalability, and the seamless integration of Kubernetes resources essential for the operation of these components. Among the various resources deployed, the ODA Component YAML stands out as a crucial element. This YAML file, a Custom Resource (CR) within Kubernetes, is pivotal for the deployment of each ODA component.

The ODA Component YAML is more than just a configuration file. It’s an adaptation of the official ODA (Open Digital Architecture) Component Specification, tailored to meet the unique requirements of our ecosystem. This specification acts as a “manifest file,” detailing critical aspects of an ODA component such as:

- API Exposure: Defines how the component’s APIs are exposed to other services or applications.

- Authentication Methods: Specifies the authentication mechanisms required to interact with the component.

- Authorization Requirements: Outlines the authorization protocols to ensure secure access.

- Subscribed and Published Events: Lists the events the component subscribes to and publishes, facilitating event-driven architecture.

Each CELL Component Helm Chart comes with an ODA Component YAML, detailing how the cluster should interact with it. This includes integration points, dependencies, and operational parameters that are essential for the component’s functionality within the broader architecture.
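
For illustration only, here is what a component manifest covering the four aspects listed above might look like. It is written as a Python dict so that it can be checked programmatically. The `oda.tmforum.org` API group mirrors the one used by the open-source ODA Canvas, but treat the version string and every field name as hypothetical simplifications. Neither the official ODA Component specification nor CELL’s adaptation of it is reproduced here.

```python
# Hypothetical, simplified component manifest (field names are illustrative).
component = {
    "apiVersion": "oda.tmforum.org/v1",  # illustrative version
    "kind": "Component",
    "metadata": {"name": "customer-status"},
    "spec": {
        "exposedAPIs": [  # API exposure
            {"name": "customerStatus", "path": "/customer-status",
             "port": 8080, "specification": "openapi/customer-status.yaml"}
        ],
        "authentication": {"method": "openid-connect"},      # authentication method
        "authorization": {"roles": ["customer-status:read"]},  # authorization requirements
        "events": {                                            # published/subscribed events
            "published": ["CustomerStatusChanged"],
            "subscribed": ["CustomerCreated"],
        },
    },
}

REQUIRED_SPEC_KEYS = {"exposedAPIs", "authentication", "authorization", "events"}


def validate(manifest):
    """Return a list of problems; an empty list means the manifest looks usable."""
    problems = []
    if manifest.get("kind") != "Component":
        problems.append("kind must be Component")
    spec = manifest.get("spec", {})
    missing = REQUIRED_SPEC_KEYS - spec.keys()
    problems.extend(f"missing spec.{key}" for key in sorted(missing))
    for api in spec.get("exposedAPIs", []):
        if not api.get("path", "").startswith("/"):
            problems.append(f"API {api.get('name')} needs an absolute path")
    return problems


print(validate(component))  # []
```

A check like `validate` hints at why a machine-readable manifest matters: tooling, rather than a human reviewer, can reject a component whose contract is incomplete.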

What sets the ODA Component YAML apart is that it is a Custom Resource in Kubernetes. It is not just a passive configuration file but a resource that Kubernetes stores and validates, and that operators running in the cluster can act upon. This capability is crucial for the CELL Canvas (our adaptation of the ODA Canvas). It allows automated orchestration and management of ODA components based on the specifications defined in the YAML files.

ODA / CELL Canvas

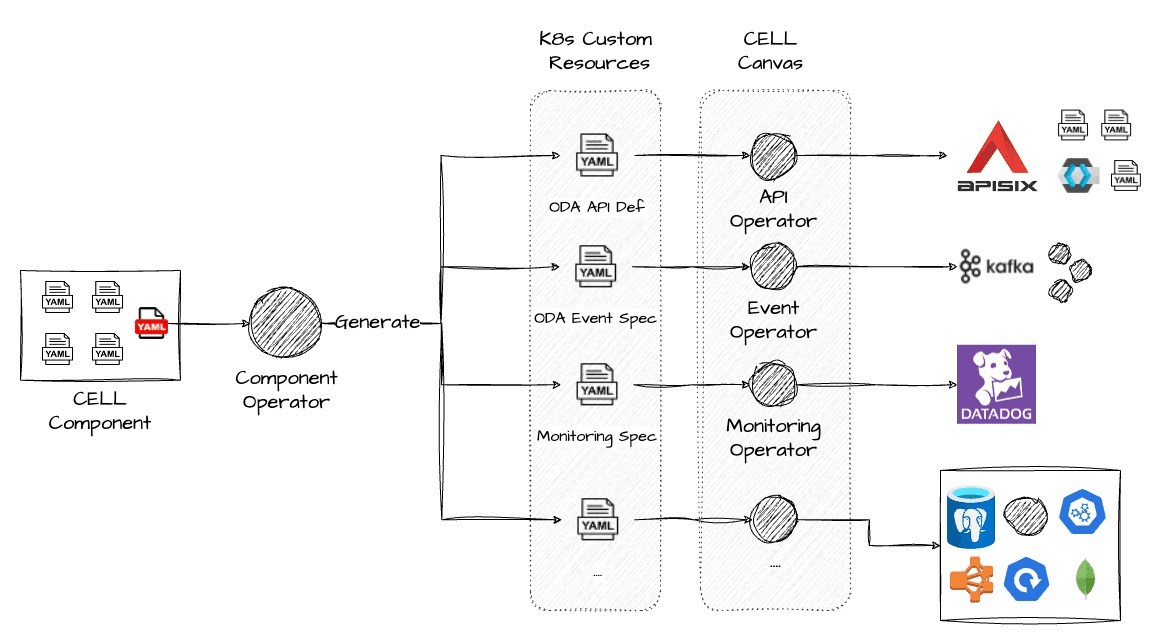

The point of defining and using Custom Resources in Kubernetes is to use them with Kubernetes Operators. Kubernetes Operators are agents inside the cluster that communicate with the Kubernetes API. They allow us to customize the environment and perform automated tasks in response to status changes of our Custom Resources.
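
The operator pattern boils down to a control loop. The operator watches for changes to a custom resource, compares the desired state it declares with what actually exists, and acts only on the difference. The sketch below shows that loop with an in-memory `FakeApiServer` standing in for the Kubernetes API. It is not the Java Operator SDK’s API, and all names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class FakeApiServer:
    """Hypothetical in-memory stand-in for the Kubernetes API."""
    custom_resources: dict = field(default_factory=dict)  # name -> declared spec
    owned: dict = field(default_factory=dict)             # objects the operator manages
    events: list = field(default_factory=list)            # queued watch events

    def apply_cr(self, name, spec):
        self.custom_resources[name] = spec
        self.events.append(("MODIFIED", name))

    def delete_cr(self, name):
        self.custom_resources.pop(name, None)
        self.events.append(("DELETED", name))


def reconcile(api, name):
    """Level-triggered: act on the current state, not on what the event said."""
    spec = api.custom_resources.get(name)
    if spec is None:
        api.owned.pop(name, None)  # clean up what we created earlier
        return
    desired = {"replicas": spec["replicas"]}
    if api.owned.get(name) != desired:  # only act when state has drifted
        api.owned[name] = desired


def run_once(api):
    """Drain queued watch events, reconciling each named resource."""
    while api.events:
        _, name = api.events.pop(0)
        reconcile(api, name)


api = FakeApiServer()
api.apply_cr("demo", {"replicas": 2})
api.apply_cr("demo", {"replicas": 3})  # second change arrives before we reconcile
run_once(api)
print(api.owned)  # {'demo': {'replicas': 3}}
api.delete_cr("demo")
run_once(api)
print(api.owned)  # {}
```

Because `reconcile` reads the current state instead of trusting the event payload, two quick updates collapse into a single correct result, and replaying an event is harmless.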

CELL Canvas (an adaptation of the ODA Canvas Blueprint) is a collection of Kubernetes Operators that manipulate Custom Resources dedicated to the ODA Specification so that they can automate many of the tasks that would otherwise be done manually.

When a CELL Component is deployed, the Component Operator detects the deployment event and generates the custom sub-resources critical for the component’s operation, such as the ODA API specification and the SubscribedEvent specification. This automated response streamlines integration within our Kubernetes environment, so each component is integrated into the existing infrastructure without manual intervention.
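
The Component Operator’s first step can be sketched as a pure function that turns one Component manifest into its child resources. `ExposedAPI` (a kind name used by the open-source ODA Canvas) stands in for the API sub-resource named above, and `SubscribedEvent` follows the text. The field names, the naming scheme, and the `cell/component` label are hypothetical. The label would let each child be traced back to its parent.

```python
def component_to_subresources(component):
    """Derive child custom resources from one Component manifest (illustrative)."""
    name = component["metadata"]["name"]
    spec = component["spec"]
    labels = {"cell/component": name}  # hypothetical label linking child to parent
    children = []
    for api in spec.get("exposedAPIs", []):
        children.append({
            "kind": "ExposedAPI",
            "metadata": {"name": f"{name}-{api['name'].lower()}", "labels": labels},
            "spec": {
                "path": api["path"],
                "port": api["port"],
                "authentication": spec.get("authentication", {}),
            },
        })
    for event in spec.get("events", {}).get("subscribed", []):
        children.append({
            "kind": "SubscribedEvent",
            "metadata": {"name": f"{name}-{event.lower()}", "labels": labels},
            "spec": {"eventType": event},
        })
    return children


component = {
    "metadata": {"name": "customer-status"},
    "spec": {
        "exposedAPIs": [{"name": "customerStatus", "path": "/customer-status", "port": 8080}],
        "authentication": {"method": "openid-connect"},
        "events": {"subscribed": ["CustomerCreated"]},
    },
}
children = component_to_subresources(component)
print([c["metadata"]["name"] for c in children])
# ['customer-status-customerstatus', 'customer-status-customercreated']
```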

The cascade of automation doesn’t stop there; secondary operators are activated by these new sub-resources, taking charge of configuring essential subsystems. In our case, those are the Apisix Gateway and Keycloak for identity and access management (IAM). This setup automates the previously labor-intensive tasks of updating the API Gateway and Keycloak for new API exposures, significantly reducing manual configuration efforts and enhancing the efficiency and reliability of our system’s expansion and maintenance.
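
The cascade to the secondary operators can be sketched the same way. `GatewayStub` and `KeycloakStub` are hypothetical stand-ins for the Apisix and Keycloak admin APIs. The Keycloak client dict uses field names from Keycloak’s client representation (`clientId`, `enabled`, `protocol`, `bearerOnly`). The route shape and all function names are invented. Every write is keyed by name, which keeps each operator idempotent, so a re-delivered event never creates a duplicate route or client.

```python
class GatewayStub:
    """Hypothetical stand-in for the Apisix admin surface: routes keyed by name."""

    def __init__(self):
        self.routes = {}

    def upsert_route(self, name, uri, upstream):
        self.routes[name] = {"uri": uri, "upstream": upstream}


class KeycloakStub:
    """Hypothetical stand-in for a Keycloak realm: clients keyed by clientId."""

    def __init__(self):
        self.clients = {}

    def upsert_client(self, client):
        self.clients[client["clientId"]] = client


def api_operator(exposed_api, gateway):
    """Secondary operator 1: expose the API on the gateway."""
    name = exposed_api["metadata"]["name"]
    spec = exposed_api["spec"]
    gateway.upsert_route(name, spec["path"] + "/*", f"{spec['service']}:{spec['port']}")


def identity_operator(exposed_api, keycloak):
    """Secondary operator 2: register an OIDC client for the API."""
    keycloak.upsert_client({
        "clientId": exposed_api["metadata"]["name"],
        "enabled": True,
        "protocol": "openid-connect",
        "bearerOnly": True,
    })


def on_exposed_api(exposed_api, gateway, keycloak):
    """Fan-out: each secondary operator configures its own subsystem."""
    api_operator(exposed_api, gateway)
    identity_operator(exposed_api, keycloak)


gateway, keycloak = GatewayStub(), KeycloakStub()
exposed_api = {
    "metadata": {"name": "customer-status-customerstatus"},
    "spec": {"path": "/customer-status", "service": "customer-status-svc", "port": 8080},
}
for _ in range(2):  # simulate a re-delivered event
    on_exposed_api(exposed_api, gateway, keycloak)
print(len(gateway.routes), len(keycloak.clients))  # 1 1
```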

Results & Benefits

Following the deployment of the CELL Canvas, we immediately noticed a significant boost in productivity, along with a substantial reduction in the cost of work previously done through manual operations.

Manual tasks, such as the development work for each API we planned to expose, have been completely eradicated. The API operator now swiftly manages API configurations, eliminating the need for manual intervention.

Furthermore, the necessity to maintain a separate archive of exposed services is a thing of the past, as our Kubernetes operator automatically updates the gateway. An operation that traditionally took up to two weeks to complete—factoring in external team communications and development—now unfolds in a mere 3 seconds.

Cluster Scaling: our cluster’s deployment flow integrates the following tools and processes, which make scaling efficient and make it easy to create testing environments:

- Infrastructure Deployment: Utilizing Terraform, we deploy the infrastructure components—including EKS, API Gateway, and Identity Provider—from top to bottom.

- Application Deployment: ArgoCD takes over to deploy the CELL Canvas, followed by the CELL Components, setting the stage for application and service configuration.

- Configuration and Integration: The CELL Canvas then configures the infrastructure to seamlessly integrate with the environment, ensuring all components communicate effectively.
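
The deployment flow above is essentially a chain of dependent stages. The sketch below declares that chain as a dependency graph and runs it in topological order with Python’s standard `graphlib` (3.9+), stopping at the first failure. Stage names are hypothetical labels for the steps in the list. `run_stage` is a placeholder for whatever actually invokes Terraform, ArgoCD, or the Canvas.

```python
from graphlib import TopologicalSorter

# Hypothetical stage graph: stage -> set of stages that must finish first.
# Order follows the flow above: Terraform (top to bottom), then ArgoCD, then the Canvas.
STAGES = {
    "terraform:eks": set(),
    "terraform:api-gateway": {"terraform:eks"},
    "terraform:identity-provider": {"terraform:api-gateway"},
    "argocd:cell-canvas": {"terraform:identity-provider"},
    "argocd:cell-components": {"argocd:cell-canvas"},
    "canvas:configure": {"argocd:cell-components"},
}


def run_pipeline(stages, run_stage):
    """Run stages in dependency order; stop at the first failure.

    `run_stage` is a caller-supplied callable returning True on success.
    Returns (completed stages, failed stage or None).
    """
    completed = []
    for stage in TopologicalSorter(stages).static_order():
        if not run_stage(stage):
            return completed, stage
        completed.append(stage)
    return completed, None


# Simulate a failure while ArgoCD deploys the Canvas.
completed, failed = run_pipeline(STAGES, lambda stage: stage != "argocd:cell-canvas")
print(completed, failed)
# ['terraform:eks', 'terraform:api-gateway', 'terraform:identity-provider'] argocd:cell-canvas
```

Declaring dependencies, rather than hard-coding a sequence, makes it easy to add a stage later without rewriting the runner. A smoke test after component configuration is one example.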

This streamlined process has revolutionized how we create ephemeral testing environments. Sometimes we need a cluster replica, whether to run regression tests, to prepare for a major production release, or for a similar purpose. In those cases we can quickly spawn an entire replica of our cluster. This takes just 16 minutes, including deploying business applications and ensuring they are properly exposed in the API Gateway.

This efficiency stands in contrast to our previous experiences with microservices clusters, where maintaining multiple environments during office hours was the norm. Our new approach not only saves time but also significantly reduces the overhead associated with managing these environments, marking a significant step forward in our operational capabilities.

Reusability is a cornerstone of an ODA cluster, which clearly separates infrastructural and business concerns. Our microservices are developed without needing to know details about their deployment environment, including logging/telemetry, authentication, and authorization mechanisms. As a result, microservices can be shared across different clusters and remain compatible and functional regardless of the specific environment.

With a functioning ODA canvas present in any cluster, a microservice and its accompanying Helm charts can be deployed to a new cluster and will “Just Work.” There’s no need for additional configuration, as the ODA Canvas in the target cluster manages all necessary setup, API exposure, and deployment tasks required by the component. This process exemplifies the efficiency and flexibility our CELL framework offers, streamlining deployments across diverse environments with minimal overhead.
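
The separation described above can be illustrated with one environment-agnostic component and two hypothetical Canvas configurations. The component declares only what it is. Each cluster’s Canvas supplies the gateway host, token issuer, and telemetry endpoint. Every name, host, and key here is invented for illustration.

```python
# The component knows nothing about the environment it will run in.
COMPONENT = {"name": "customer-status", "path": "/customer-status", "port": 8080}

# Hypothetical per-cluster Canvas settings; only the Canvas knows these.
CANVASES = {
    "production": {
        "gateway_host": "api.example.com",
        "issuer": "https://sso.example.com/realms/cell",
        "telemetry": "otel-collector.observability:4317",
    },
    "ephemeral-test": {
        "gateway_host": "api.test-42.example.internal",
        "issuer": "https://sso.test-42.example.internal/realms/cell",
        "telemetry": "otel-collector.observability:4317",
    },
}


def materialize(component, canvas):
    """What a Canvas might derive for this component in its own cluster."""
    return {
        "route": f"https://{canvas['gateway_host']}{component['path']}",
        "token_issuer": canvas["issuer"],
        "telemetry_endpoint": canvas["telemetry"],
        "upstream": f"{component['name']}:{component['port']}",
    }


for env, canvas in CANVASES.items():
    print(env, materialize(COMPONENT, canvas)["route"])
# production https://api.example.com/customer-status
# ephemeral-test https://api.test-42.example.internal/customer-status
```

The component definition is identical in both clusters; only the Canvas-supplied values differ. That is what lets the same Helm chart "just work" in a new cluster.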

Conclusion

Writing this article took more time than anticipated, yet it feels as if we’ve only begun to scratch the surface of what CELL brings to Vodafone Greece.

Vodafone Greece’s journey through modernizing its IT infrastructure with the Digital eXperience Layer (DXL) and the subsequent development of the CELL platform represents a significant leap toward addressing the complexities of operating in a large enterprise environment.

The adoption of microservices, facilitated by innovative practices such as the use of Kubernetes Operators and the implementation of the ODA architecture, showcases a strategic move towards flexibility, scalability, and efficiency. The challenges encountered during the scaling of DXL highlighted the necessity for a tailored approach to microservices within large enterprises and Vodafone specifically, leading to a deeper understanding and more sophisticated handling of such systems.

Through automation, streamlined deployment processes, and the addressing of technical and management challenges, Vodafone Greece has set a new standard for leveraging technology to enhance operational capabilities and deliver superior digital experiences. This journey not only underscores the importance of continuous innovation in the face of complex enterprise environments but also sets a blueprint for others facing similar challenges in the technology landscape.

We presented the CELL platform to the general public during the 22nd Athens Kubernetes Meetup.