VoiceOver in iOS Applications: Accessibility in Practice

VoiceOver is Apple’s built-in screen reader, which enables users with visual impairments to navigate and interact with iOS applications. In this article, we’ll briefly explain what VoiceOver is, how to build accessibility support into your iOS apps, and how to test with VoiceOver to ensure a more inclusive user experience.

What is Accessibility?

Accessibility refers to the design and development of products and services that can be used by people with disabilities. Its goal is that products can be used by everyone without restrictions. Its importance is evident in everyday features like accessible restrooms and audible crosswalk signals, which show how it should guide our design thinking.

Accessibility has been integrated into digital products, including mobile applications, to provide a seamless and inclusive experience for all users.

VoiceOver

VoiceOver is an accessibility feature for users with visual impairments that works as a gesture-based screen reader. Apple states that "You can use iPhone even if you can’t see the screen."

The main features of VoiceOver are:

Navigation and interaction without the need to see the screen.

Auditory feedback for each visual element like battery level or incoming calls.

Additionally, it supports over 50 languages, making it accessible to users worldwide.

Teams at Vodafone take VoiceOver into account when designing and implementing new features.

Accessibility is embedded into UIKit and SwiftUI controls and views, making the user experience accessible by default.

UIKit

Standard UIKit controls like UIButton, UILabel, and UITextField are VoiceOver compatible by default. One thing to note is that UIImageView is not accessible by default.

To make your custom element accessible to VoiceOver programmatically, define it as an accessibility element.

customView.isAccessibilityElement = true
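As a minimal sketch of the UIImageView case mentioned above, the snippet below opts an image view in and gives it a spoken description. The variable name, the SF Symbol and the label text are placeholders chosen for illustration, not part of any real app.

```swift
import UIKit

// Hypothetical image view; the symbol name and label text are placeholders.
let promoImageView = UIImageView(image: UIImage(systemName: "gift"))

// UIImageView is not an accessibility element by default, so opt in explicitly.
promoImageView.isAccessibilityElement = true

// Describe what the image conveys rather than what it looks like.
promoImageView.accessibilityLabel = "Special offer"

// Tell VoiceOver that this element is an image.
promoImageView.accessibilityTraits = .image
```

If an image is purely decorative, leaving isAccessibilityElement at false is usually the better choice, because VoiceOver then skips it.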

SwiftUI

SwiftUI provides excellent accessibility support, so standard views like Text and Image are accessible by default.
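The sketch below shows one way to control what VoiceOver does with SwiftUI images. The view name OfferRow and the asset name "offer-background" are hypothetical; the point is only the difference between a decorative image and a labelled one.

```swift
import SwiftUI

// Hypothetical row; the asset and symbol names are placeholders.
struct OfferRow: View {
    var body: some View {
        HStack {
            // Purely decorative artwork: VoiceOver skips it.
            Image(decorative: "offer-background")

            // Meaningful icon: give it an explicit spoken description.
            Image(systemName: "gift")
                .accessibilityLabel("Special offer")

            // Text is read as-is, no extra work needed.
            Text("20% off this week")
        }
    }
}
```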

Accessibility Labels

Accessibility labels provide the description that VoiceOver reads when an element is focused. If you want the description to be more explanatory, or VoiceOver should read something different from the visible text, set the accessibilityLabel property on your elements.

UIKit

myButton.accessibilityLabel = "Press here"

SwiftUI

Button("Tap me") {
    print("Tap")
}
.accessibilityLabel("Press here")

Note: If your application supports localization, your labels need to be localized to different languages.
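A minimal sketch of a localized label in UIKit could look like this. The key "top_up.accessibility_label" is a hypothetical entry in Localizable.strings; the value: argument is only the fallback used when no translation is found.

```swift
import UIKit

let topUpButton = UIButton(type: .system)
topUpButton.setTitle("Top-up", for: .normal)

// "top_up.accessibility_label" is a hypothetical key in Localizable.strings.
// If the key is missing, NSLocalizedString falls back to the given value.
topUpButton.accessibilityLabel = NSLocalizedString(
    "top_up.accessibility_label",
    value: "Top up your balance",
    comment: "VoiceOver label for the top-up button"
)
```

In SwiftUI, passing a string literal to .accessibilityLabel(_:) treats it as a LocalizedStringKey, so the same key lookup happens without an explicit NSLocalizedString call.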

Accessibility Hints

An accessibility hint provides additional context for the focused element, such as what happens when the user activates it.

UIKit

myButton.accessibilityHint = "Please press here for redirection"

SwiftUI

Button("Tap me") {
    print("Tap")
}
.accessibilityHint("Please press here for redirection")

Note: If your application supports localization, your hints need to be localized to different languages.

Accessibility Traits

We use accessibility traits to tell VoiceOver how an element behaves. For example, if we add a custom gesture to a view that makes it behave like a button, we should let VoiceOver know.

UIKit

agreeTitle.accessibilityTraits = .button
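Assigning .button replaces whatever traits the element already had. If the existing traits should be kept, one option is to insert the new trait instead, as in this sketch (the label text is a placeholder):

```swift
import UIKit

// Hypothetical label that reacts to a tap gesture like a button would.
let agreeTitle = UILabel()
agreeTitle.text = "I agree to the terms"
agreeTitle.isUserInteractionEnabled = true

// accessibilityTraits is an option set: insert(_:) adds .button
// while keeping the traits the label already had.
agreeTitle.accessibilityTraits.insert(.button)

// Traits can also be removed again, for example when the gesture is disabled.
// agreeTitle.accessibilityTraits.remove(.button)
```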

SwiftUI

Text("Tap me")
    .accessibilityAddTraits(.isButton)

Accessibility Notifications

When the layout of a screen changes, the app can post an accessibility notification. Using this notification, we can make VoiceOver focus on the element that we want.

UIKit

UIAccessibility.post(notification: .layoutChanged, argument: backButton)

VoiceOver will focus the backButton element.
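Besides .layoutChanged, UIKit offers other notifications that fit different situations. The helper functions below are hypothetical wrappers, shown only to illustrate when each notification might be posted.

```swift
import UIKit

// Hypothetical helper: speak a short status message without moving focus.
func announcePhotosLoaded() {
    UIAccessibility.post(notification: .announcement, argument: "Photos loaded")
}

// Hypothetical helper: a whole new screen appeared, so ask VoiceOver
// to reset its focus and start from the given title label.
func didPresentNewScreen(focusing titleLabel: UILabel) {
    UIAccessibility.post(notification: .screenChanged, argument: titleLabel)
}
```

As a rule of thumb, .layoutChanged suits partial updates of the current screen, while .screenChanged suits a completely new screen.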

SwiftUI

AccessibilityNotification.Announcement("Loading Photos View").post()

Grouping Elements

Grouping UI elements simplifies user navigation in our app.

UIKit

self.accessibilityElements = [
    errorMessageLabel,
    tryAgainLabel
]

// UILabel.text is optional, so drop nil values instead of interpolating them.
self.accessibilityLabel = [errorMessageLabel.text, tryAgainLabel.text]
    .compactMap { $0 }
    .joined(separator: " ")

self.accessibilityTraits = .button
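An alternative way to group, sketched below, is to expose the container itself as the single accessibility element and compute its label from the child labels. ErrorBannerView is a hypothetical class written for this illustration, not code from the app described here.

```swift
import UIKit

// Hypothetical container that VoiceOver should treat as one tappable element.
final class ErrorBannerView: UIView {
    let errorMessageLabel = UILabel()
    let tryAgainLabel = UILabel()

    override init(frame: CGRect) {
        super.init(frame: frame)
        addSubview(errorMessageLabel)
        addSubview(tryAgainLabel)

        // Expose the container as a single element; VoiceOver then
        // no longer stops on the individual labels inside it.
        isAccessibilityElement = true
        accessibilityTraits = .button
    }

    required init?(coder: NSCoder) {
        fatalError("init(coder:) has not been implemented")
    }

    // Computed on demand so the spoken text always matches the visible text.
    override var accessibilityLabel: String? {
        get {
            [errorMessageLabel.text, tryAgainLabel.text]
                .compactMap { $0 }          // skip labels without text
                .joined(separator: ", ")    // the comma adds a short pause
        }
        set { }                             // label is derived, ignore writes
    }
}
```

With the two labels set to "Something went wrong" and "Try again", the banner's accessibility label becomes "Something went wrong, Try again".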

SwiftUI

The .accessibilityElement(children:) modifier is applied to a parent view and controls how its children are exposed to VoiceOver, for example by combining them into a single accessibility element.

VStack {
    Text("Your score is")
    Text("1000")
        .font(.title)
}
.accessibilityElement(children: .combine)

In the example above, VoiceOver reads the two texts together, with a short pause between them.

If you want VoiceOver to read them as a single text instead, you can use the approach below.

VStack {

Text("Your score is")

Text("1000")

.font(.title)

}

.accessibilityElement(children: .ignore)

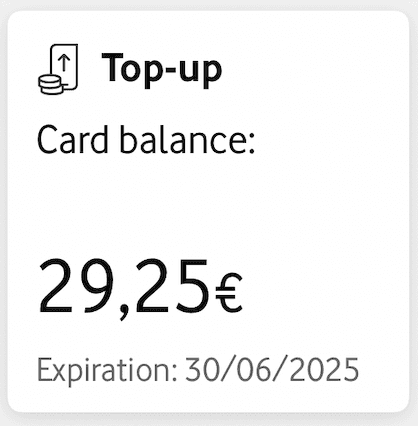

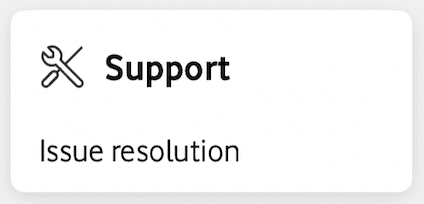

.accessibilityLabel("Your score is 1000") How users “listen" My vodafone application components

“Top-up, Card balance, Twenty-nine euros and twenty-five cents, Expiration the thirtieth of June 2025, Button”

“Support, Issue resolution, Button”

Challenges - Limitations

When adopting VoiceOver in your app, it's essential to consider both the iOS application and server-side development. Remember that content received from the server cannot be edited on the client side.

Another crucial point to keep in mind is how VoiceOver handles abbreviations, units, symbols, and formatted dates.

It can handle many of these categories, but its accuracy often depends on the context in which they are used.

The date format in an iOS app affects how VoiceOver reads dates. For example, a date in "dd/mm/yyyy" format might not be read clearly by VoiceOver.

| Example | How VoiceOver says it | How VoiceOver should say it |
| --- | --- | --- |
| 10/02/2025 | “10 slash 02 slash 2025” | “10 February 2025” |
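One possible way to get the reading in the last column is to keep the visible text unchanged and give the element a separate accessibility label with the month spelled out. The function name spokenDate(from:) is hypothetical, and the fixed "en_GB" locale and UTC time zone are assumptions made to keep the sketch deterministic.

```swift
import Foundation

/// Hypothetical helper: turns a "dd/MM/yyyy" string into a form that
/// a screen reader can pronounce naturally, or nil if parsing fails.
func spokenDate(from displayedDate: String) -> String? {
    // Parse with a fixed locale so the result does not depend on user settings.
    let parser = DateFormatter()
    parser.locale = Locale(identifier: "en_US_POSIX")
    parser.timeZone = TimeZone(identifier: "UTC")
    parser.dateFormat = "dd/MM/yyyy"
    guard let date = parser.date(from: displayedDate) else { return nil }

    // Spell out the month name; the locale is fixed here only for the example.
    let speaker = DateFormatter()
    speaker.locale = Locale(identifier: "en_GB")
    speaker.timeZone = TimeZone(identifier: "UTC")
    speaker.dateFormat = "d MMMM yyyy"
    return speaker.string(from: date)
}

let label = spokenDate(from: "10/02/2025")
// label == "10 February 2025"
```

The result could then be assigned to the element's accessibilityLabel. In a real app, using the user's current locale with dateStyle = .long would probably be preferable to a hard-coded format.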

Additionally, when an iPhone is set to English, VoiceOver handles many common abbreviations well, expanding them into their full forms as it reads them aloud. For example, it will read "NOV" as "November" and "St." as "street". Nevertheless, depending on the application context, these abbreviations may have multiple meanings.
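When the default expansion does not match the meaning in your app, one option is to supply the spoken form yourself. The sketch below uses a hypothetical, app-specific dictionary and a hypothetical helper spokenLabel(for:); it only replaces whole words separated by spaces, which is an intentional simplification.

```swift
import Foundation

// Hypothetical, app-specific expansions. Only the app knows which meaning
// an abbreviation has in its own context.
let spokenForms: [String: String] = [
    "GB": "gigabytes",
    "MB": "megabytes",
    "min": "minutes"
]

/// Replaces every known abbreviation in the visible text with its spoken form.
func spokenLabel(for visibleText: String) -> String {
    visibleText
        .split(separator: " ")
        .map { word in spokenForms[String(word)] ?? String(word) }
        .joined(separator: " ")
}

let spoken = spokenLabel(for: "5 GB and 300 min")
// spoken == "5 gigabytes and 300 minutes"
```

The visible text stays as it is, and the returned string would be set as the element's accessibilityLabel.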

Debugging

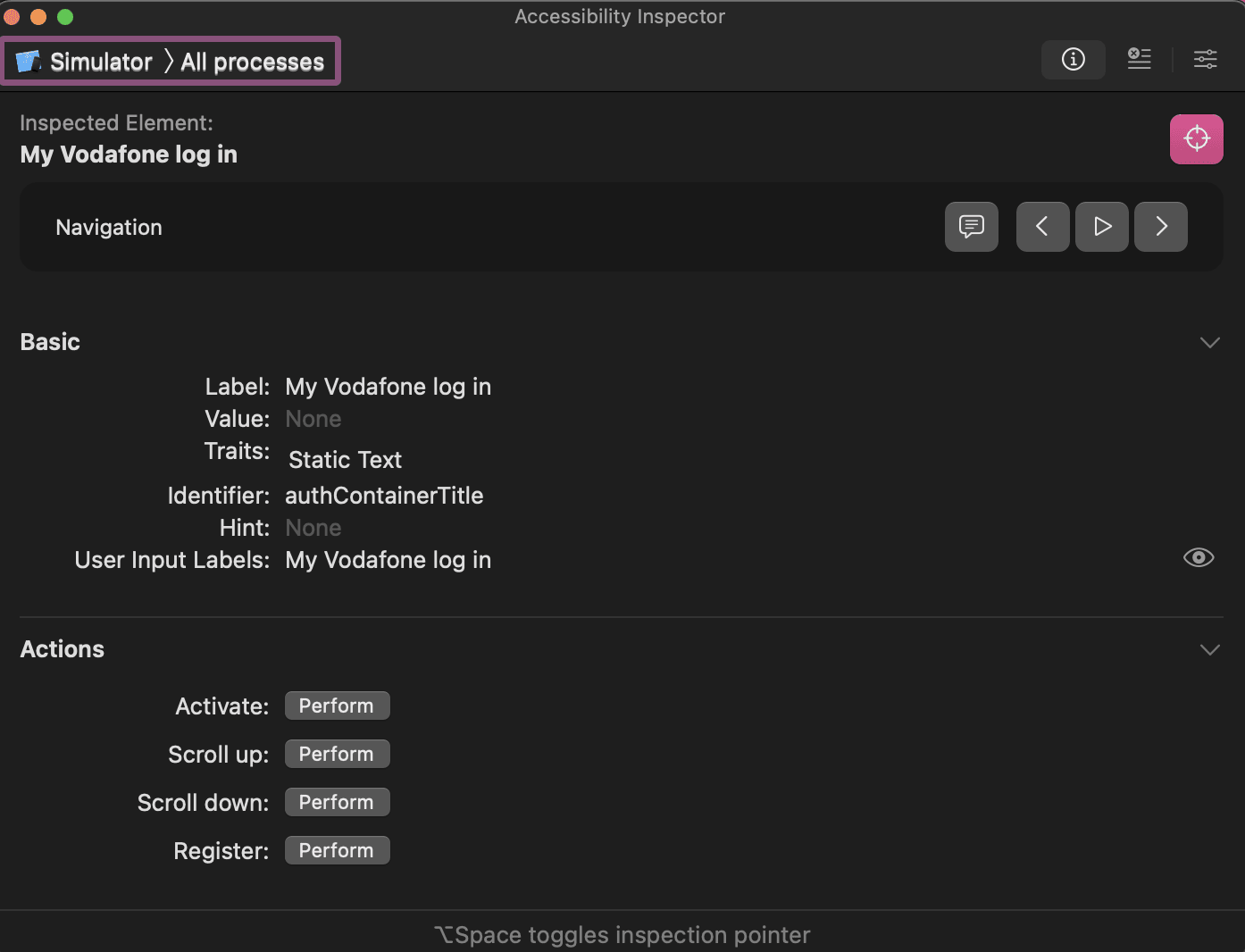

The most powerful accessibility debugging tool available to iOS developers is Xcode's "Accessibility Inspector".

With this tool, a developer can not only check whether elements are accessible and in what way, but also simulate VoiceOver behavior. It inspects each element on each screen and displays information about it, such as what a user hears when VoiceOver is enabled and which interactions and properties the element has.

Conclusion

Accessibility features help ensure that people with disabilities can participate in and navigate the digital world on their own. The abilities and needs of each user should guide the design and development of every application.

Thanks for reading!

Useful links:

https://developer.apple.com/accessibility/

https://www.apple.com/voiceover/info/guide/_1121.html

https://developer.apple.com/documentation/accessibility/inspecting-the-accessibility-of-screens

https://developer.apple.com/documentation/uikit/supporting-voiceover-in-your-app

https://developer.apple.com/documentation/swiftui/view-accessibility

https://support.apple.com/en-gb/guide/iphone/iph3e2e415f/ios